LiDAR technology is a game-changer for self-driving cars, giving them the ability to “see” and navigate their surroundings with incredible precision. This smart sensing system creates detailed 3D maps of everything around the car, helping autonomous vehicles make quick, accurate decisions on the road. As we move closer to fully self-driving cars, LiDAR keeps getting better—smaller, more efficient, and less expensive. Let’s take a closer look at how LiDAR works in self-driving cars, how it teams up with other sensors, what challenges it still faces, and where this technology is headed next.

What is LiDAR, and Why is it Essential for Autonomous Driving?

LiDAR (Light Detection and Ranging) is a sensing technology that measures distances using laser light. In self-driving cars, it works like a super-powered “eye” that constantly scans the environment, building precise 3D pictures of everything around the vehicle. It works by shooting out laser pulses and measuring how long it takes for those pulses to bounce off objects and come back.

Unlike us human drivers, who mainly rely on what we can see, self-driving cars need something more reliable and precise to stay safe on the road. LiDAR brings several big advantages:

- It measures distances with amazing accuracy (down to the centimeter).

- It works in all lighting conditions, even in total darkness.

- It creates highly detailed 3D maps of the surroundings.

- It updates these maps many times every second.

For autonomous cars, LiDAR provides the spatial awareness needed to spot obstacles, find safe paths, and make driving decisions with confidence. This capability is essential for moving beyond basic driver assistance to truly autonomous driving.

The Science Behind LiDAR: How it Works

Basic Operating Principles

At its heart, LiDAR uses a pretty straightforward concept called time-of-flight. A laser unit sends out short pulses of light (usually infrared), which travel outward until they hit something. When the light hits a surface, part of it reflects back to the LiDAR sensor, and timing circuitry measures exactly how long this round trip took.

Since light travels at a known, constant speed (299,792,458 meters per second in a vacuum, and very nearly that in air), figuring out the distance is simple:

Distance = (Speed of Light × Time of Flight) ÷ 2

We divide by two because the light has to travel to the object and back. This process repeats up to millions of times per second, and the resulting measurement points together form what’s called a “point cloud.”
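The formula above can be sketched in a few lines of Python. The function names here are made up for illustration, and the second helper simply inverts the same formula to show how fine the timing has to be for a given range resolution.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458  # speed of light in a vacuum


def tof_distance_m(round_trip_s: float) -> float:
    """Distance to the target for a measured round-trip time in seconds."""
    if round_trip_s < 0:
        raise ValueError("round-trip time cannot be negative")
    # Halve the product because the pulse travels out and back.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


def required_timing_resolution_s(range_resolution_m: float) -> float:
    """Round-trip timing step needed to resolve a given distance step."""
    return 2.0 * range_resolution_m / SPEED_OF_LIGHT_M_PER_S


# A pulse that comes back after one microsecond hit something about 150 m away.
print(f"{tof_distance_m(1e-6):.1f} m")

# Resolving 1 cm means timing the return to within tens of picoseconds.
print(f"{required_timing_resolution_s(0.01) * 1e12:.0f} ps")
```

The second number is the reason the timing circuits matter so much: centimeter-level accuracy means timing each return to within a few tens of picoseconds.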

Creating 3D Point Clouds

The real magic of LiDAR is that it turns all of these individual measurements into a detailed 3D picture of the world. As the laser pulses are fired in different directions (either by physically rotating the sensor or by steering the beam electronically), each returning pulse adds another point to the 3D map.

Today’s automotive LiDAR systems can generate millions of points every second, each with precise X, Y, and Z coordinates. This creates a detailed digital snapshot that can distinguish between:

- Cars and trucks with their exact dimensions

- People walking and cyclists

- Road edges and lane markings

- Traffic signs and signals

- Buildings and other structures

- Trees, bushes, and other features

For self-driving cars, this point cloud data provides vital information about where objects are, how the road is shaped, what obstacles might be ahead, and where the car can safely drive.
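To make the step from a single measurement to an X, Y, Z point concrete, here is a minimal sketch that converts one return (range plus the beam's two firing angles) into Cartesian coordinates. The axis convention (x forward, y left, z up) and the function name are assumptions for this example; real sensors document their own conventions.

```python
import math


def polar_to_xyz(range_m, azimuth_deg, elevation_deg):
    """Convert one LiDAR return into sensor-frame (x, y, z) coordinates.

    Assumed convention: x points forward, y left, z up. Azimuth is measured
    counter-clockwise from the x axis in the horizontal plane, and elevation
    is measured upward from that plane.
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    horizontal = range_m * math.cos(el)  # projection onto the ground plane
    return (
        horizontal * math.cos(az),
        horizontal * math.sin(az),
        range_m * math.sin(el),
    )


# One revolution of a single laser channel, sampled every 90 degrees.
ring = [polar_to_xyz(10.0, az, -2.0) for az in (0, 90, 180, 270)]
for point in ring:
    print(tuple(round(c, 2) for c in point))
```

Repeating this for every channel and every firing angle is what builds up the full point cloud.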

Accounting for Vehicle Movement

Roads aren’t perfectly smooth, and when a car moves, it naturally rocks side to side. This means a laser beam fired straight from the top of the car might not be level with the road. Getting these angles right is super important when measuring what’s around the car.

That’s where the Inertial Measurement Unit (IMU) comes in. The IMU tracks how the LiDAR unit is oriented and moving, allowing the system to adjust for the car’s motion when building its map. This ensures that small angle changes—which could mean the difference between stopping in time or hitting another car—are correctly factored into the distance calculations.
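As a rough illustration of why those small angles matter, the sketch below rotates a sensor-frame point by the roll and pitch an IMU might report. The function name and the sign conventions (right-handed rotations about an x-forward, y-left, z-up frame) are assumptions for this example. A production system would use the full orientation, including yaw, and would also compensate for motion during the scan.

```python
import math


def level_point(point, roll_deg, pitch_deg):
    """Rotate a sensor-frame point into a gravity-levelled frame.

    Assumes roll is a right-handed rotation about the x (forward) axis and
    pitch a right-handed rotation about the y (left) axis.
    """
    x, y, z = point
    r = math.radians(roll_deg)
    p = math.radians(pitch_deg)
    # Roll: rotate about the x axis.
    y, z = y * math.cos(r) - z * math.sin(r), y * math.sin(r) + z * math.cos(r)
    # Pitch: rotate about the y axis.
    x, z = x * math.cos(p) + z * math.sin(p), -x * math.sin(p) + z * math.cos(p)
    return (x, y, z)


# A return 50 m straight ahead, measured while the car is pitched by 1 degree.
x, y, z = level_point((50.0, 0.0, 0.0), roll_deg=0.0, pitch_deg=1.0)
print(f"height shift at 50 m: {abs(z):.2f} m")
```

With these numbers, a single degree of uncorrected pitch moves a point 50 m away by almost 0.9 m vertically, which is enough to confuse the road surface with an obstacle.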

LiDAR Hardware Components and Configuration

Core Components of Automotive LiDAR Systems

A typical car LiDAR system includes several key parts:

- Laser emitter: Creates the infrared light pulses used for measurements.

- Optical components: Guide the laser pulses outward in specific patterns.

- Photodetector: Catches the returning light signals.

- Timing circuits: Measure the exact time between sending and receiving pulses.

- Processing unit: Converts time measurements into distances and builds point cloud data.

- Scanning mechanism: Aims laser pulses to cover the entire field of view.

These components work together to create a sensing system that can map the car’s surroundings with remarkable detail and accuracy.

Common Mounting Configurations

If you’ve spotted an autonomous vehicle, you’ve probably noticed that spinning cylinder on its roof. That’s the most visible and traditional LiDAR setup, giving a full 360° view around the car. But as LiDAR evolves, we’re seeing different mounting approaches:

- Roof-mounted systems: Give the best overall view but stick out visually.

- Bumper-integrated units: Look better but see less of the surroundings.

- Multi-LiDAR arrays: Place smaller sensors all around the vehicle for complete coverage.

- Behind-windshield mounting: Integrates LiDAR more seamlessly into the car’s design.

The specific setup depends on how autonomous the vehicle needs to be, design constraints, and what the car is meant to do. Fully self-driving cars typically need more complete coverage than cars with just some automated features.

From Raw Data to Actionable Intelligence

Processing Point Cloud Data

The huge amount of data from LiDAR systems creates significant computing challenges. A typical 64-channel sensor produces over 2 million points every second. Because each point carries an extra spatial dimension compared with an image pixel, cutting-edge 3D models can need around 14 times more computing power than their 2D image-processing counterparts.
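To get a feel for where a figure like "over 2 million points every second" can come from, here is a back-of-the-envelope calculation. The configuration (2,048 firing angles per revolution, 10 revolutions per second, two returns per pulse, 16 bytes per point) is an illustrative assumption, not the specification of any particular sensor.

```python
def points_per_second(channels, azimuth_steps, rotation_hz, returns_per_pulse=1):
    """Points produced per second by a spinning multi-channel LiDAR."""
    return channels * azimuth_steps * rotation_hz * returns_per_pulse


def data_rate_mb_per_s(points_per_s, bytes_per_point=16):
    """Raw data rate, assuming x, y, z and intensity stored as 4-byte floats."""
    return points_per_s * bytes_per_point / 1_000_000


# Hypothetical 64-channel configuration.
rate = points_per_second(channels=64, azimuth_steps=2048, rotation_hz=10,
                         returns_per_pulse=2)
print(f"{rate:,} points/s, about {data_rate_mb_per_s(rate):.0f} MB/s")
```

Even under these modest assumptions the raw stream runs to tens of megabytes per second, before any processing has happened.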

Before raw point cloud data becomes useful for driving decisions, it goes through several steps:

- Filtering out noise and incorrect readings

- Grouping points that belong to the same objects

- Identifying what each object represents

- Monitoring how objects move over time

These processing steps transform raw distance measurements into structured information about the vehicle’s surroundings that can guide navigation decisions.
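The first two steps, filtering and grouping, can be sketched with nothing but the standard library. This is a deliberately simple stand-in: the range limits, the cell size, and the grid-based flood fill are assumptions chosen for readability, whereas production pipelines typically use more robust filters and clustering methods.

```python
import math
from collections import deque


def filter_points(points, min_range=0.5, max_range=100.0):
    """Drop returns that are implausibly close to or far from the sensor."""
    origin = (0.0, 0.0, 0.0)
    return [p for p in points if min_range <= math.dist(p, origin) <= max_range]


def cluster_points(points, cell_size=0.5):
    """Group points whose occupied ground-plane grid cells touch each other."""
    cells = {}
    for p in points:
        key = (math.floor(p[0] / cell_size), math.floor(p[1] / cell_size))
        cells.setdefault(key, []).append(p)

    clusters, seen = [], set()
    for start in cells:
        if start in seen:
            continue
        seen.add(start)
        queue, cluster = deque([start]), []
        while queue:  # flood fill across neighbouring occupied cells
            cx, cy = queue.popleft()
            cluster.extend(cells[(cx, cy)])
            for dx in (-1, 0, 1):
                for dy in (-1, 0, 1):
                    neighbour = (cx + dx, cy + dy)
                    if neighbour in cells and neighbour not in seen:
                        seen.add(neighbour)
                        queue.append(neighbour)
        clusters.append(cluster)
    return clusters


raw = [(5.0, 0.0, 0.0), (5.2, 0.1, 0.0), (5.4, 0.0, 0.5),   # one nearby object
       (20.0, 10.0, 0.0), (20.3, 10.2, 0.0),                # a second object
       (0.1, 0.0, 0.0), (500.0, 0.0, 0.0)]                  # spurious returns
clusters = cluster_points(filter_points(raw))
print([len(c) for c in clusters])
```

Each resulting cluster is a candidate object that the later identification and tracking steps can work on.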

Object Detection and Classification

One of the most critical functions of LiDAR in self-driving cars is how it spots and identifies objects. Smart algorithms look at point cloud patterns to recognize different types of road users and obstacles with impressive accuracy. Today’s systems can spot and classify objects over 200 meters away, giving crucial early warnings about potential hazards.

The system can tell the difference between:

- Moving vehicles and where they’re heading

- Pedestrians and cyclists

- Stationary obstacles like poles and barriers

- Road edges and lane markings

This ability to classify objects is key for predicting how different things might behave and planning the right responses.
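To show what kind of information a cluster of points can yield, the toy example below measures a cluster's bounding box, applies a crude size-based label, and estimates speed and heading from two consecutive frames. The thresholds and function names are hypothetical; real systems generally rely on trained models rather than hand-written size rules.

```python
import math


def bounding_box(points):
    """Axis-aligned extent of a cluster as (length_x, width_y, height_z)."""
    xs, ys, zs = zip(*points)
    return (max(xs) - min(xs), max(ys) - min(ys), max(zs) - min(zs))


def centroid(points):
    """Mean position of a cluster."""
    n = len(points)
    return tuple(sum(axis) / n for axis in zip(*points))


def guess_label(points):
    """Toy size-based rule. The thresholds are hypothetical."""
    dx, dy, dz = bounding_box(points)
    footprint = max(dx, dy)
    if footprint > 2.5:
        return "vehicle"
    if dz > 1.0 and footprint < 1.0:
        return "pedestrian"
    return "unknown"


def speed_and_heading(prev_points, curr_points, dt_s):
    """Ground-plane speed (m/s) and heading (degrees) between two frames."""
    x0, y0, _ = centroid(prev_points)
    x1, y1, _ = centroid(curr_points)
    vx, vy = (x1 - x0) / dt_s, (y1 - y0) / dt_s
    return math.hypot(vx, vy), math.degrees(math.atan2(vy, vx))


car_t0 = [(10.0, 0.0, 0.0), (14.0, 0.0, 0.0), (10.0, 1.8, 1.5), (14.0, 1.8, 1.5)]
car_t1 = [(x + 1.0, y, z) for x, y, z in car_t0]  # same car, 0.1 s later
print(guess_label(car_t0), speed_and_heading(car_t0, car_t1, dt_s=0.1))
```

Knowing both what an object is and how it is moving is what lets the planner anticipate its behaviour.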

LiDAR in Sensor Fusion Systems

Why Multiple Sensors Are Necessary

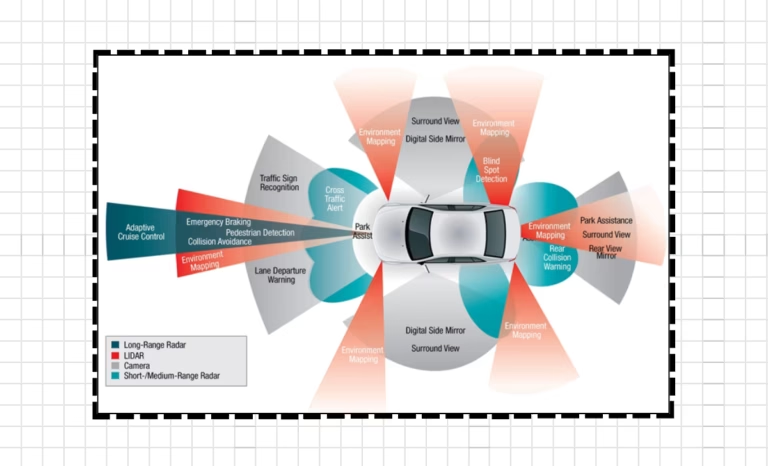

While LiDAR gives exceptional spatial awareness, it has limits that make a multi-sensor approach necessary. For instance, LiDAR can’t see colors, read signs, or interpret traffic lights. That’s why self-driving cars typically combine LiDAR with other sensing technologies.

Sensor fusion is crucial for autonomous vehicles, with LiDAR playing a major role in both perception and localization systems. Both these areas need multiple sensors to work effectively:

- Perception systems combine cameras, LiDAR, and radar to figure out what obstacles are, where they are, and how fast they’re moving.

- Localization systems blend GPS, LiDAR, and camera data to pinpoint the vehicle’s position with centimeter-level accuracy.

Integrating with Cameras and Radar

Each type of sensor brings unique strengths to the table:

Cameras (2D sensors) are great at:

- Visually recognizing objects, signs, and markings

- Spotting colors and interpreting visual cues

- Reading text on signs and signals

- Working in visually complex environments

LiDAR (3D sensors) provides:

- Exact spatial mapping and distance measurement

- Functionality in low-light conditions

- Detailed object dimensions and positioning

- 3D point clouds with coordinate data

Radar offers:

- Solid performance in rain, fog, and snow

- Direct speed measurements

- Long-range detection abilities

- Reliable operation when conditions get tough

Fusion Approaches: Early vs. Late

In sensor fusion, engineers typically use two main approaches:

Early fusion combines raw data from different sensors before processing it. One example is projecting LiDAR point clouds onto 2D camera images to check whether the points belong to specific objects in the image. This approach gives comprehensive results but needs serious computing power.
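The projection step can be sketched with a basic pinhole camera model. This example assumes the LiDAR points have already been transformed into the camera's frame (x right, y down, z forward) using a calibration that is not shown, and the intrinsic values are invented for illustration.

```python
def project_to_pixel(point_cam, fx, fy, cx, cy):
    """Project a 3D point in the camera frame onto the image plane.

    Returns (u, v) pixel coordinates, or None if the point is behind the camera.
    """
    x, y, z = point_cam
    if z <= 0:
        return None
    return (fx * x / z + cx, fy * y / z + cy)


def points_in_box(points_cam, box, fx, fy, cx, cy):
    """Keep the points whose projection lands inside a 2D detection box.

    box is (u_min, v_min, u_max, v_max) in pixels.
    """
    u_min, v_min, u_max, v_max = box
    hits = []
    for p in points_cam:
        pixel = project_to_pixel(p, fx, fy, cx, cy)
        if pixel is None:
            continue
        u, v = pixel
        if u_min <= u <= u_max and v_min <= v <= v_max:
            hits.append(p)
    return hits


# Hypothetical intrinsics for a 1280x720 camera.
intrinsics = dict(fx=1000.0, fy=1000.0, cx=640.0, cy=360.0)
cloud = [(0.0, 0.0, 10.0), (5.0, 0.0, 10.0), (0.0, 0.0, -5.0)]
pedestrian_box = (600, 300, 700, 400)  # box from an image detector
print(points_in_box(cloud, pedestrian_box, **intrinsics))
```

The points that land inside the box give the camera's detection a measured distance, which the image alone cannot provide.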

Late fusion processes each sensor’s data separately before combining the results. For instance, a camera might identify something as a pedestrian while LiDAR confirms where they are and where they’re going. This approach is more efficient computationally but might miss connections visible in the raw data.
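A minimal late-fusion sketch might match each camera detection to the LiDAR object that sits at the closest bearing. The data layout (labels with bearings from the camera, ground-plane centroids from LiDAR), the function name, and the 3-degree tolerance are all assumptions made for this example.

```python
import math


def fuse_late(camera_detections, lidar_objects, max_bearing_diff_deg=3.0):
    """Attach a LiDAR position to each camera label by comparing bearings.

    camera_detections: list of (label, bearing_deg) from the image pipeline.
    lidar_objects: list of (x, y) object centroids, x forward and y left.
    Bearings are assumed to stay well inside +/-180 degrees (forward-facing
    camera), so angle wrap-around is not handled here.
    """
    fused = []
    for label, cam_bearing in camera_detections:
        best = None
        for x, y in lidar_objects:
            diff = abs(math.degrees(math.atan2(y, x)) - cam_bearing)
            if diff <= max_bearing_diff_deg and (best is None or diff < best[0]):
                best = (diff, x, y)
        if best is not None:
            _, x, y = best
            fused.append({"label": label, "x": x, "y": y,
                          "range_m": math.hypot(x, y)})
    return fused


camera = [("pedestrian", 10.0), ("car", -20.0)]
lidar = [(20.0, 3.5), (30.0, -11.0), (5.0, 5.0)]
for obj in fuse_late(camera, lidar):
    print(obj["label"], f"{obj['range_m']:.1f} m")
```

Because each sensor has already reduced its data to a short list of objects, the matching step is cheap, which is where late fusion gets its efficiency.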

Both approaches have their merits, and many advanced self-driving systems use a mix of both early and late fusion strategies to get the best performance.

Challenges and Limitations of Current LiDAR Technology

Data Processing Demands

A major downside of LiDAR is that its massive 3D data is computationally intensive to process, and handling it all in real time takes serious computing muscle. To keep up, engineers often have to flatten the data into 2D representations, which means losing a lot of information.
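One common way to flatten a point cloud is a bird's-eye-view grid. The sketch below keeps only the maximum height seen in each ground cell; the cell size and function name are assumptions for illustration.

```python
import math


def to_birds_eye(points, cell_size=0.5):
    """Collapse a 3D point cloud into a 2D grid of maximum heights.

    Returns a dict mapping (col, row) ground cells to the highest z seen there.
    """
    grid = {}
    for x, y, z in points:
        cell = (math.floor(x / cell_size), math.floor(y / cell_size))
        grid[cell] = max(z, grid.get(cell, -math.inf))
    return grid


cloud = [(1.1, 0.1, 0.2),   # low return, for example a curb
         (1.2, 0.2, 1.8),   # high return in the same cell, for example a sign
         (4.0, 4.0, 0.5)]
grid = to_birds_eye(cloud)
print(len(cloud), "points ->", len(grid), "cells:", grid)
```

The first two points fall into the same cell, so the lower one disappears from the 2D view. That is the kind of information loss flattening brings.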

A team from MIT has been tackling this problem by developing a self-driving system that uses machine learning to handle raw 3D point cloud data more efficiently. Their end-to-end framework can navigate autonomously using only raw 3D point cloud data and basic GPS maps. By optimizing both their algorithms and systems, they’ve achieved a significant speed boost compared to existing 3D LiDAR approaches.

Environmental and Performance Limitations

LiDAR systems face several practical challenges:

- Weather vulnerability: Rain, snow, and fog can scatter laser beams, cutting down range and accuracy.

- Surface material issues: Very shiny or light-absorbing materials can be tricky to detect reliably.

- Range constraints: Though getting better, detection range might not be enough for high-speed driving.

- Interference concerns: Multiple LiDARs operating close to each other might interfere with one another.

These limitations show why approaches that combine LiDAR with complementary tech like radar and cameras are essential for robust self-driving systems.
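The range constraint is easy to see with a rough stopping-distance estimate: distance covered during the system's reaction time plus braking distance. The reaction time and deceleration values below are illustrative assumptions, not measured figures.

```python
def stopping_distance_m(speed_m_per_s, reaction_time_s, deceleration_m_per_s2):
    """Distance travelled before a full stop: reaction phase plus braking."""
    reaction = speed_m_per_s * reaction_time_s
    braking = speed_m_per_s ** 2 / (2.0 * deceleration_m_per_s2)
    return reaction + braking


def kmh_to_ms(speed_kmh):
    """Convert kilometers per hour to meters per second."""
    return speed_kmh / 3.6


speed = kmh_to_ms(130)  # highway speed
dry = stopping_distance_m(speed, reaction_time_s=0.5, deceleration_m_per_s2=6.0)
wet = stopping_distance_m(speed, reaction_time_s=0.5, deceleration_m_per_s2=3.0)
print(f"dry road: {dry:.0f} m, slippery road: {wet:.0f} m")
```

Under these assumptions the car needs about 127 m to stop on a dry road and about 235 m on a slippery one. The second figure is more than the roughly 200 meters at which today's systems spot and classify objects, and bad weather shrinks that range further.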

Cost and Integration Challenges

Historically, high costs have limited widespread LiDAR adoption in production vehicles. Early automotive LiDAR units could cost upwards of $75,000, making them way too expensive for mainstream cars. While prices have dropped considerably in recent years, cost remains a big factor for car manufacturers.

Also, the distinctive look of traditional roof-mounted LiDAR units creates aesthetic challenges for consumer vehicles. Manufacturers are increasingly focused on more integrated designs that look good while maintaining LiDAR functionality.

Innovations and Future Developments

Solid-State LiDAR Technology

The future of automotive LiDAR likely involves solid-state systems without moving parts. Unlike traditional mechanical LiDAR with spinning components, solid-state LiDAR uses electronic beam steering that offers several advantages:

- Better reliability with fewer mechanical parts to break

- Potentially much lower production costs

- Smaller sizes that blend more seamlessly into vehicle designs

- Less power use and heat generation

These advances are making LiDAR more practical for mass-market use while expanding what autonomous systems can do.

Enhanced Processing Solutions

Researchers are developing smarter ways to handle the massive data streams from LiDAR systems. MIT’s team, for example, has created deep learning components that make better use of modern GPU hardware to control vehicles in real time using raw LiDAR data.

These processing improvements allow self-driving systems to:

- Get more information from point cloud data.

- Make driving decisions with greater confidence.

- Work more reliably in tough conditions.

- Need less human intervention.

In testing, these enhanced systems have shown they can reduce how often a human driver needs to take over and can even handle severe sensor failures.

Conclusion

LiDAR technology has established itself as a foundational component of advanced autonomous driving systems, providing the precise environmental perception necessary for safe navigation in complex environments. By emitting laser pulses and measuring their return time, LiDAR creates detailed 3D representations of a vehicle’s surroundings that guide critical driving decisions.

While challenges remain—particularly regarding cost, weather resilience, and data processing demands—rapid innovations are addressing these limitations. The integration of LiDAR with complementary sensing technologies through sophisticated fusion approaches creates robust perception systems that exceed what any single sensor could provide.

As LiDAR technology continues to evolve, becoming more compact, affordable, and capable, it will play an increasingly vital role in the advancement of autonomous vehicles. For automotive professionals and technology enthusiasts alike, understanding LiDAR’s capabilities and ongoing development provides valuable insight into one of the cornerstone technologies enabling the autonomous transportation revolution.