Sensor fusion is the backbone of autonomous driving technology. It combines data from multiple sensors to create a complete, real-world picture of what’s around the vehicle. By mixing LiDAR, radar, and cameras together, self-driving cars can “see” their surroundings way better than they could with just one type of sensor. This smart combination helps autonomous vehicles navigate tricky environments safely, even in conditions that would throw off individual sensors, ultimately making the roads safer for everyone.

Understanding the Core Sensing Technologies

Before we get into sensor fusion, let’s look at what each main sensor brings to the table and where it falls short. Understanding these strengths and limitations makes it clear why combining sensors is essential for a comprehensive and reliable perception system.

LiDAR: The 3D Mapping Specialist

LiDAR (Light Detection and Ranging) creates detailed 3D maps by shooting out laser pulses and measuring how long they take to bounce back. This technology gives autonomous vehicles some serious advantages:

- Measures distances with precision down to centimeters, which is crucial for navigating complex environments.

- Creates incredibly detailed 3D point clouds of everything around them.

- Works well in all lighting conditions, even total darkness, because LiDAR supplies its own laser illumination.

- Accurately figures out the size and shape of objects for improved object recognition and avoidance.

But LiDAR isn’t perfect. Traditional mechanical LiDAR systems have been super expensive (though prices are dropping). They also don’t work as well in bad weather like heavy rain, snow, or fog, since these conditions scatter the laser beams.

Radar: The All-Weather Performer

Radar uses radio waves to spot objects and directly measure how fast they’re moving. Unlike light-based systems, radar shines in tough weather conditions, making it a must-have in the autonomous sensing toolkit.

The big benefits of radar include:

- Reliable performance in rain, snow, fog, and other nasty weather conditions.

- Direct measurement of object speed through the Doppler effect, plus the ability to see through dust and smoke.

- Long-range detection, often seeing more than 200 meters ahead with high accuracy.

- Lower cost and power needs than LiDAR, which makes radar an affordable core sensor for autonomous vehicles.

The downside? Radar typically has lower resolution than LiDAR and cameras, so it’s not as good at distinguishing between objects that are close together or spotting smaller items. This limitation can be mitigated by combining radar with other sensors.

Cameras: The Visual Context Providers

Camera systems deliver the richest semantic information of all autonomous vehicle sensors, capturing visual data much like human eyes do. With advanced computer vision algorithms, cameras enable:

- Object recognition and classification, including pedestrian detection.

- Lane detection and reading of road markings, which the vehicle needs to navigate safely and effectively.

- Traffic sign and signal recognition, so the vehicle can understand and obey traffic laws.

- Color and texture identification, which helps separate objects and pedestrians from the background.

But cameras have their weak points too. They heavily depend on good lighting and can’t directly measure distance like LiDAR or radar can. Things like dirt or water droplets on lenses can also seriously hurt their performance.

Why Sensor Fusion is Essential

No single sensor can provide everything needed for safe autonomous driving. Each technology has specific weaknesses that other sensors can help cover.

Complementary Strengths and Weaknesses

When properly integrated, these technologies make up for each other’s shortcomings:

- LiDAR gives precise spatial mapping but can’t read text on signs or tell whether a traffic light is red, while cameras can read signs and recognize signal colors but struggle in low light.

- Cameras are great at understanding what objects are but struggle to measure exact distances to them.

- Radar works reliably in bad weather and is good at detecting the speed and direction of objects, but it lacks the detail needed for complex object identification.

This complementary relationship makes sensor fusion not just helpful but absolutely necessary for truly autonomous operation. By bringing these different data streams together, vehicles achieve perception abilities that go beyond what any individual sensor could provide.

Enhanced Reliability Through Redundancy

Sensor fusion creates important backup systems in autonomous vehicles. If one sensor starts performing poorly due to environmental conditions or technical problems, others can pick up the slack to keep things running safely.

For instance, when driving through heavy fog:

- Camera visibility drops dramatically.

- LiDAR range might be significantly reduced, because fog droplets scatter the laser pulses.

- Radar continues to provide reliable distance and speed data, so the vehicle can still detect obstacles and maintain a safe following distance.

This redundancy is crucial for safety-critical systems like autonomous driving, where failures could have serious consequences.

Sensor Fusion Architectures

Sensor data can be combined at different stages of the perception pipeline, each approach having its own pros and cons. The decision on which architecture to use depends on the specific requirements of the autonomous driving system.

Early Fusion: Combining Raw Data

Early fusion combines data from different sensors at the raw data level, before object detection and classification happen. This approach:

- Lets the system discover subtle connections between sensor inputs.

- Can achieve higher accuracy because it uses all available information.

- Requires precise timing and calibration between sensors.

- Needs substantial computing power to process the combined raw data.

A typical early fusion setup might project LiDAR point clouds onto camera images to add spatial data to visual information before processing begins.
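The sketch below gives a rough illustration of that projection step. It maps LiDAR points into pixel coordinates with a pinhole camera model. It assumes a known 4×4 extrinsic transform `T_cam_from_lidar` and a 3×3 intrinsic matrix `K`, both obtained from calibration. The function name and the example matrices are hypothetical. Real pipelines also correct for lens distortion and for vehicle motion during the LiDAR sweep.

```python
import numpy as np


def project_lidar_to_image(points_lidar, T_cam_from_lidar, K, image_size):
    """Project Nx3 LiDAR points to pixels.

    Returns (pixels, depths) for the points that lie in front of the camera
    and land inside the image. T_cam_from_lidar (4x4) and K (3x3) are assumed
    to come from a prior calibration.
    """
    n = points_lidar.shape[0]
    # Homogeneous coordinates so the 4x4 rigid transform can be applied.
    homog = np.hstack([points_lidar, np.ones((n, 1))])
    points_cam = (T_cam_from_lidar @ homog.T).T[:, :3]
    # Keep only points with positive depth along the camera's optical axis.
    points_cam = points_cam[points_cam[:, 2] > 0.0]
    # Pinhole projection: u = fx * x / z + cx, v = fy * y / z + cy.
    uvw = (K @ points_cam.T).T
    pixels = uvw[:, :2] / uvw[:, 2:3]
    width, height = image_size
    inside = (
        (pixels[:, 0] >= 0) & (pixels[:, 0] < width)
        & (pixels[:, 1] >= 0) & (pixels[:, 1] < height)
    )
    return pixels[inside], points_cam[inside, 2]
```

Each surviving point carries its depth. The resulting sparse depth values can therefore be attached to the corresponding image pixels before a detector runs.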

Late Fusion: Integration at Object Level

Late fusion processes data from each sensor separately, performing object detection and classification independently before combining results. This method:

- Needs less computing power than early fusion, but may result in lower accuracy.

- Makes system design and sensor integration simpler, but can lead to inconsistencies in the final output.

- Can miss cross-sensor connections that are visible only in the raw data.

- Offers more flexibility for system upgrades and improvements in the long run.

In a late fusion approach, a camera might identify something as a pedestrian while LiDAR confirms its position and movement, with the results then merged into a unified picture. Each sensor contributes what it does best: the camera supplies the classification and LiDAR supplies the geometry.
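The object-level merge can be sketched as a simple association step. This is a minimal sketch under two assumptions: the camera reports a class label plus the horizontal bearing of each detection, and LiDAR reports object positions in the vehicle frame. The data classes, the greedy matching, and the 3° gate are all hypothetical choices, not a specific production method.

```python
import math
from dataclasses import dataclass


@dataclass
class CameraDetection:
    label: str          # class from the image detector, e.g. "pedestrian"
    bearing_rad: float  # horizontal angle, 0 = straight ahead, positive = left


@dataclass
class LidarObject:
    x: float  # metres forward of the vehicle
    y: float  # metres to the left of the vehicle


@dataclass
class FusedObject:
    label: str
    x: float
    y: float


def late_fuse(camera_dets, lidar_objs, gate_rad=math.radians(3.0)):
    """Give each camera label to the unused LiDAR object with the closest bearing within the gate."""
    fused, used = [], set()
    for det in camera_dets:
        best_idx, best_err = None, gate_rad
        for i, obj in enumerate(lidar_objs):
            if i in used:
                continue
            # Wrapped angular difference between LiDAR bearing and camera bearing.
            err = abs(math.remainder(math.atan2(obj.y, obj.x) - det.bearing_rad, 2 * math.pi))
            if err <= best_err:
                best_idx, best_err = i, err
        if best_idx is not None:
            used.add(best_idx)
            obj = lidar_objs[best_idx]
            fused.append(FusedObject(det.label, obj.x, obj.y))
    return fused
```

Greedy matching in detection order is the simplest choice. A real system would more likely solve a global assignment and also check that the ranges are consistent.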

Hybrid Approaches for Optimal Performance

Many real-world autonomous systems use hybrid approaches that combine aspects of both early and late fusion. These practical implementations often use early fusion for critical functions and late fusion for less critical tasks, finding the sweet spot between accuracy and processing efficiency under real-time constraints.

Data Integration Techniques

Smart algorithms transform raw sensor data into structured information that guides the vehicle’s decision-making. This improves safety on the road and makes the ride smoother and more reliable for passengers.

Point Cloud Processing

LiDAR generates millions of 3D points every second, creating huge data processing challenges. Advanced point cloud processing techniques include:

- Clustering algorithms that group points belonging to the same objects together.

- Ground plane detection to separate road-surface points from points that belong to obstacles.

- Object segmentation to identify distinct things in the environment.

- Semantic segmentation to assign each point (or, for camera images, each pixel) a class such as road, vehicle, or pedestrian.
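Two of these steps, ground plane detection and clustering, can be sketched in a few lines. This minimal sketch assumes a roughly flat road. It fits the ground with RANSAC, removes those points, and groups the remaining points by Euclidean distance. The thresholds and function names are hypothetical. The brute-force neighbor search only suits small clouds; real pipelines typically use KD-trees or voxel grids and constrain the plane normal to be near vertical.

```python
import numpy as np


def fit_ground_plane_ransac(points, iterations=100, threshold=0.05, seed=0):
    """Return a boolean mask of points within `threshold` metres of the best-supported plane."""
    rng = np.random.default_rng(seed)
    best_inliers = np.zeros(len(points), dtype=bool)
    for _ in range(iterations):
        sample = points[rng.choice(len(points), size=3, replace=False)]
        normal = np.cross(sample[1] - sample[0], sample[2] - sample[0])
        norm = np.linalg.norm(normal)
        if norm < 1e-9:
            continue  # collinear sample: it does not define a plane
        normal = normal / norm
        distances = np.abs((points - sample[0]) @ normal)
        inliers = distances < threshold
        if inliers.sum() > best_inliers.sum():
            best_inliers = inliers
    return best_inliers


def euclidean_clusters(points, radius=0.5, min_size=3):
    """Group points so each member is within `radius` of some other member (region growing)."""
    unvisited = set(range(len(points)))
    clusters = []
    while unvisited:
        start = unvisited.pop()
        frontier, members = [start], [start]
        while frontier:
            idx = frontier.pop()
            remaining = np.array(sorted(unvisited), dtype=int)
            if remaining.size == 0:
                break
            dists = np.linalg.norm(points[remaining] - points[idx], axis=1)
            for j in remaining[dists <= radius]:
                unvisited.discard(int(j))
                frontier.append(int(j))
                members.append(int(j))
        if len(members) >= min_size:
            clusters.append(sorted(members))
    return clusters
```

Calling `euclidean_clusters(cloud[~ground_mask])` on the non-ground points yields one index list per candidate object.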

A team from MIT developed an end-to-end framework that processes raw 3D point cloud data efficiently, achieving significant speed improvements compared to traditional approaches while maintaining high accuracy.

Probabilistic Occupancy Grids

One really effective way to combine LiDAR and radar data is through probabilistic occupancy grids—basically maps that track how likely it is that objects exist in different spots in the environment.

This approach:

- Creates one unified view from different sensor inputs.

- Handles sensor uncertainty in a mathematically sound way.

- Keeps computational demands manageable.

- Helps detect moving objects reliably in various environments.
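A common way to make this fusion mathematically sound is to store each cell's occupancy in log-odds form, so that independent evidence from different sensors simply adds up. The sketch below makes two assumptions. First, each sensor's measurement has already been turned into a per-cell occupancy probability (an inverse sensor model). Second, sensor errors are independent. The class and method names are hypothetical.

```python
import numpy as np


def logit(p):
    """Probability -> log-odds."""
    return np.log(p / (1.0 - p))


class OccupancyGrid:
    def __init__(self, shape, prior=0.5):
        self.prior_log_odds = logit(prior)
        self.log_odds = np.full(shape, self.prior_log_odds, dtype=float)

    def update(self, cells, p_occupied):
        """Fuse one sensor's occupancy probability for a list of distinct (row, col) cells."""
        rows = np.array([r for r, _ in cells])
        cols = np.array([c for _, c in cells])
        # Bayesian update in log-odds form: add the new evidence, remove the prior once.
        self.log_odds[rows, cols] += logit(p_occupied) - self.prior_log_odds

    def probabilities(self):
        """Log-odds -> occupancy probability for every cell."""
        return 1.0 / (1.0 + np.exp(-self.log_odds))
```

Suppose LiDAR reports 0.7 and radar reports 0.6 for the same cell. Together they give about 0.78, higher than either alone. This reinforcement is the behavior the grid is meant to capture.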

AI-Powered Fusion Approaches

Deep learning has completely changed how we handle sensor fusion. Modern approaches now include:

- Neural networks that learn the best fusion strategies straight from data.

- End-to-end systems that process raw sensor data and output driving decisions without hand-designed intermediate stages.

- Modified detection networks like YOLO paired with tracking algorithms like DeepSORT to detect objects and follow them over time.

In one study, researchers combined YOLO object detection with DeepSORT tracking for LiDAR and radar fusion. They reached 68.3% AMOTA (average multi-object tracking accuracy) for tracking and 68.7% mAP (mean average precision) for detection.
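The association step at the heart of tracking-by-detection can be sketched simply. The example below greedily matches existing track boxes to new detection boxes by intersection over union (IoU). It is a simplified stand-in for illustration only. SORT solves this matching with the Hungarian algorithm, and DeepSORT additionally uses appearance features and motion-based gating. The function names and the 0.3 threshold are hypothetical.

```python
def iou(a, b):
    """Intersection over union of two axis-aligned boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def associate(tracks, detections, min_iou=0.3):
    """Greedily pair tracks and detections in order of decreasing IoU.

    Returns (matches as (track_idx, det_idx) pairs, indices of unmatched detections).
    """
    pairs = sorted(
        ((iou(t, d), ti, di) for ti, t in enumerate(tracks) for di, d in enumerate(detections)),
        reverse=True,
    )
    used_tracks, used_dets, matches = set(), set(), []
    for score, ti, di in pairs:
        if score < min_iou:
            break  # all remaining pairs overlap too little
        if ti in used_tracks or di in used_dets:
            continue
        used_tracks.add(ti)
        used_dets.add(di)
        matches.append((ti, di))
    unmatched = [di for di in range(len(detections)) if di not in used_dets]
    return matches, unmatched
```

Unmatched detections would typically start new tracks. Tracks that stay unmatched for several frames would be deleted.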

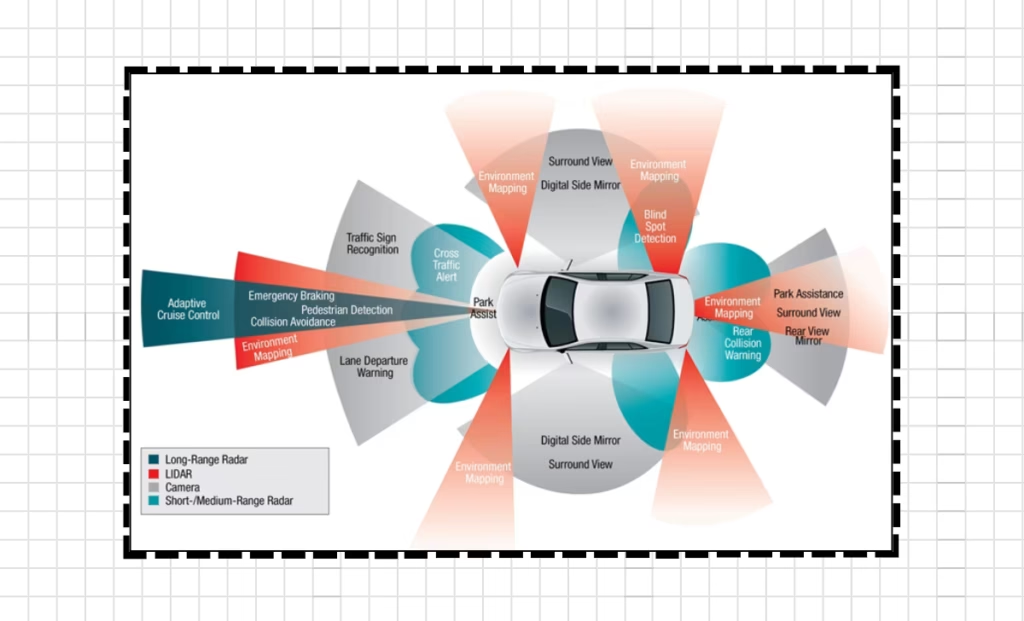

Real-World Applications of Multisensor Integration

Sensor fusion enables the critical perception abilities that self-driving cars need.

Detection and Tracking of Dynamic Objects

One of the most crucial uses of sensor fusion is reliably spotting and tracking moving objects like cars, pedestrians, and cyclists.

By putting together LiDAR’s precise spatial data, radar’s velocity measurements, and a camera’s object recognition, self-driving systems get:

- Better detection rates across different object types.

- More accurate position and speed estimates of surrounding objects.

- Improved predictions of where objects will move next.

- Better resilience when individual sensors have problems or fail.
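A Kalman filter is one common way to combine LiDAR position with radar velocity. The sketch below is a minimal one-dimensional version. It assumes a constant-velocity motion model along the line of sight, with LiDAR measuring only position and radar measuring only radial (Doppler) velocity. The class name and all noise values are hypothetical.

```python
import numpy as np


class ConstantVelocityKF:
    """1-D constant-velocity Kalman filter with state [position (m), velocity (m/s)]."""

    def __init__(self, x0, P0, accel_std=1.0):
        self.x = np.asarray(x0, dtype=float)
        self.P = np.asarray(P0, dtype=float)
        self.accel_std = accel_std  # hypothetical std. dev. of unmodelled acceleration

    def predict(self, dt):
        F = np.array([[1.0, dt], [0.0, 1.0]])
        # Process noise from a white-acceleration model.
        G = np.array([[0.5 * dt**2], [dt]])
        Q = self.accel_std**2 * (G @ G.T)
        self.x = F @ self.x
        self.P = F @ self.P @ F.T + Q

    def update(self, z, H, R):
        """Standard Kalman update for a measurement z = H x + noise with covariance R."""
        H = np.atleast_2d(np.asarray(H, dtype=float))
        R = np.atleast_2d(np.asarray(R, dtype=float))
        y = np.atleast_1d(z) - H @ self.x    # innovation
        S = H @ self.P @ H.T + R              # innovation covariance
        K = self.P @ H.T @ np.linalg.inv(S)   # Kalman gain
        self.x = self.x + K @ y
        self.P = (np.eye(self.x.size) - K @ H) @ self.P


# Hypothetical measurement models: LiDAR observes position, radar observes velocity.
LIDAR_H, LIDAR_R = [[1.0, 0.0]], [[0.05**2]]
RADAR_H, RADAR_R = [[0.0, 1.0]], [[0.1**2]]
```

Each sensor has its own measurement matrix `H` and noise `R`. As a result, LiDAR and radar measurements can arrive at different times and are simply applied in sequence after each prediction.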

Research using probabilistic occupancy grids to combine LiDAR and radar showed major improvements in object detection and tracking compared to using just one sensor.

Environmental Mapping and Localization

Self-driving vehicles need to know exactly where they are—a challenge that sensor fusion helps solve.

Combined sensing systems improve localization through:

- LiDAR map matching, which compares current scans to an existing map and corrects the vehicle’s estimated position.

- Visual landmarks spotted by cameras that serve as reference points.

- Radar signatures from fixed structures, which stay consistent even in poor visibility or bad weather.
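The core of LiDAR map matching can be sketched as a rigid alignment problem. Assume the correspondences between current scan points and map points are already known. The rotation and translation that best align them in the least-squares sense then follow from the SVD-based Kabsch method shown below. A full ICP loop would alternate this step with nearest-neighbor matching. The function name is hypothetical, and the example is 2D for brevity.

```python
import numpy as np


def align_scan_to_map(scan_pts, map_pts):
    """Return (R, t) minimising the squared error of map ≈ R @ scan + t for matched 2D points."""
    scan_c = scan_pts.mean(axis=0)
    map_c = map_pts.mean(axis=0)
    # Cross-covariance of the centred point sets.
    H = (scan_pts - scan_c).T @ (map_pts - map_c)
    U, _, Vt = np.linalg.svd(H)
    R = Vt.T @ U.T
    if np.linalg.det(R) < 0:
        # Flip the last singular direction so R is a proper rotation, not a reflection.
        Vt[-1, :] *= -1
        R = Vt.T @ U.T
    t = map_c - R @ scan_c
    return R, t
```

The recovered `R` and `t` describe how far the vehicle's estimated pose must be rotated and shifted to line the scan up with the map.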

This multi-sensor approach can achieve centimeter-level positioning accuracy even when GPS signals get blocked by tall buildings or other obstacles.

Navigation in Challenging Conditions

Perhaps the strongest case for sensor fusion is how it maintains performance in tough conditions.

For example:

- In low light, where cameras struggle, LiDAR works fine because it provides its own illumination.

- During rain, when LiDAR range decreases, radar is far less affected and keeps working reliably.

- In visually complex scenes, camera-based understanding complements LiDAR’s spatial mapping, and radar fills the remaining gaps with velocity information.
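One simple way to express this adaptability is to weight each sensor by its expected noise under current conditions. The sketch below fuses independent range readings with inverse-variance weighting and inflates each sensor's variance when it is raining. The variance table, the rain multipliers, and the function name are all hypothetical. The numbers were chosen only to mirror the qualitative behavior described above.

```python
# Hypothetical range-noise variances in m^2; real values depend on the specific sensors.
CLEAR_VARIANCE = {"lidar": 0.01, "radar": 0.25, "camera": 1.0}
# Hypothetical multipliers: rain degrades LiDAR and cameras far more than radar.
RAIN_INFLATION = {"lidar": 25.0, "radar": 1.5, "camera": 16.0}


def fuse_ranges(readings, raining=False):
    """Inverse-variance weighted fusion of independent range readings {sensor: metres}.

    Returns the fused range and its variance.
    """
    weights = {}
    for sensor in readings:
        var = CLEAR_VARIANCE[sensor] * (RAIN_INFLATION[sensor] if raining else 1.0)
        weights[sensor] = 1.0 / var
    total = sum(weights.values())
    fused = sum(weights[s] * r for s, r in readings.items()) / total
    return fused, 1.0 / total
```

In clear weather the LiDAR reading dominates. In rain its weight drops and radar's share of the estimate grows, while the larger fused variance reflects the loss of certainty.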

This adaptability lets self-driving systems operate across many more driving conditions than any single sensor could handle.

Implementation Challenges

Despite its benefits, sensor fusion comes with significant technical hurdles.

Data Synchronization and Calibration

Different sensors work at different speeds and have varying processing times. LiDAR, radar, and cameras typically have different frame rates and response times—differences that need to be reconciled for accurate data integration.
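One basic reconciliation step is to resample a slower or offset sensor stream onto another sensor's timestamps. The sketch below linearly interpolates a radar range track onto camera frame times with `numpy.interp`. It assumes that all sensors share a synchronized clock (for example via hardware triggering or PTP) and that the quantity varies smoothly between samples. The function name and sensor rates are hypothetical.

```python
import numpy as np


def resample_to(query_times, sample_times, sample_values):
    """Linearly interpolate one sensor's time series onto another sensor's timestamps.

    Returns (values, valid); valid is False where a query falls outside the sampled span.
    """
    sample_times = np.asarray(sample_times, dtype=float)
    sample_values = np.asarray(sample_values, dtype=float)
    query_times = np.asarray(query_times, dtype=float)
    if np.any(np.diff(sample_times) <= 0):
        raise ValueError("sample_times must be strictly increasing")
    # np.interp clamps to the end values outside the span, so flag those queries.
    valid = (query_times >= sample_times[0]) & (query_times <= sample_times[-1])
    return np.interp(query_times, sample_times, sample_values), valid


# Hypothetical example: 20 Hz radar ranges resampled to 30 Hz camera frame times.
radar_t = np.arange(5) * 0.05
radar_range = 50.0 - 10.0 * radar_t  # object closing at 10 m/s
camera_t = np.array([0.0, 1 / 30, 2 / 30, 0.25])
ranges, ok = resample_to(camera_t, radar_t, radar_range)
```

The last camera frame lies beyond the newest radar sample, so it is flagged rather than silently given a clamped value.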

Precise alignment between sensors is also critical. Even small misalignments can cause major perception errors at distance. Keeping everything calibrated despite vibration, temperature changes, and other factors remains challenging.
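A quick worked example shows why small misalignments matter at distance. Assume a hypothetical yaw misalignment of 0.5° between a LiDAR and a camera. A point at distance d then ends up displaced sideways by about e:

```latex
\begin{aligned}
e &= d \tan\theta, \qquad \theta = 0.5^\circ \;\Rightarrow\; \tan\theta \approx 0.00873 \\
e(10\,\mathrm{m}) &\approx 0.09\,\mathrm{m}, \qquad e(50\,\mathrm{m}) \approx 0.44\,\mathrm{m}
\end{aligned}
```

An error that is negligible nearby grows to nearly half a meter at 50 m. That is enough to place a LiDAR return beside a pedestrian rather than on them.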

Processing Massive Data Streams

The computing demands of sensor fusion are huge. A self-driving car with a full sensor suite can generate gigabytes of raw sensor data every second, all needing real-time processing.
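A back-of-the-envelope calculation shows where these volumes come from. The sensor suite below is hypothetical: eight uncompressed 1080p RGB cameras at 30 fps, plus one LiDAR producing about 1.3 million points per second at 16 bytes per point. Real vehicles vary widely and usually compress camera streams.

```python
def camera_bytes_per_s(width, height, bytes_per_pixel, fps):
    """Raw (uncompressed) data rate of one camera in bytes per second."""
    return width * height * bytes_per_pixel * fps


# Hypothetical sensor suite for illustration only.
one_camera = camera_bytes_per_s(1920, 1080, 3, 30)  # 8-bit RGB at 30 fps
lidar = 1_300_000 * 16  # points/s x bytes/point (x, y, z, intensity as float32)
total = 8 * one_camera + lidar

print(f"one camera: {one_camera / 1e6:.1f} MB/s")  # one camera: 186.6 MB/s
print(f"total: {total / 1e9:.2f} GB/s")  # total: 1.51 GB/s
```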

This challenge has sparked innovations in:

- Special hardware accelerators built for sensor fusion workloads.

- Streamlined algorithms that reduce processing needs.

- Selective processing approaches that focus computing power on what matters most.

- Edge computing setups that process data close to the sensors and spread the load across multiple units to reduce latency.
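Voxel-grid downsampling is one widely used example of a streamlined step. It replaces all LiDAR points inside each small cube with their centroid, cutting the point count before heavier processing. The function below is a minimal NumPy sketch, and its name is hypothetical. The voxel size trades detail for speed.

```python
import numpy as np


def voxel_downsample(points, voxel_size):
    """Replace all points falling in the same cubic voxel with their centroid."""
    keys = np.floor(points / voxel_size).astype(np.int64)
    # Index of each point's voxel among the unique (sorted) voxels.
    _, inverse, counts = np.unique(keys, axis=0, return_inverse=True, return_counts=True)
    inverse = inverse.reshape(-1)  # some NumPy 2.x releases return a 2-D inverse here
    sums = np.zeros((len(counts), points.shape[1]))
    np.add.at(sums, inverse, points)  # accumulate coordinates per voxel
    return sums / counts[:, None]
```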

Integration and Packaging

Moving from research prototypes to production vehicles brings new challenges around packaging, looks, and manufacturability.

Production implementation requires elegant solutions, such as:

- Solid-state LiDAR with no moving parts, which improves long-term reliability and durability.

- Sensors mounted behind windshields or integrated into bumpers, where they must stay clean and calibrated despite heat, vibration, and dirt.

- Camera systems that blend into the vehicle’s design.

- Radar arrays built into the vehicle’s front end, an approach already common in advanced driver assistance systems.

These practical issues heavily influence sensor fusion system design, often requiring trade-offs between ideal sensor positioning and real-world constraints.

Future Developments in Sensor Fusion

As self-driving technology matures, several promising trends are emerging.

Emerging Sensor Technologies

While LiDAR, radar, and cameras form the foundation of current systems, new sensing technologies are expanding the possibilities:

- High-resolution 4D imaging radar, which adds elevation to range, azimuth, and velocity, approaching LiDAR-like capabilities at lower cost.

- Event cameras that report per-pixel brightness changes with microsecond-level latency.

- Thermal imaging that enhances perception in darkness and bad weather.

- Short-range ultrasonic sensors for precise maneuvering in tight spaces.

These emerging technologies will expand the sensor fusion toolkit, enabling more robust perception systems.

Advancements in AI for Sensor Fusion

Artificial intelligence continues to transform how sensor fusion works. Recent developments include:

- Neural networks that learn optimal fusion strategies directly from data.

- End-to-end learning approaches that process raw sensor data directly without the need for manual feature extraction.

- Attention mechanisms that focus processing on the most relevant sensor information for improved decision-making.

- Transfer learning techniques that reduce training data requirements by leveraging knowledge from related tasks or domains.
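The attention idea can be illustrated with a toy example. Each sensor contributes one feature vector. Scaled dot-product attention turns a query into softmax weights that decide how much each sensor's features count in the fused result. In a real network the query, keys, and values come from learned projections. Here they are hypothetical hand-picked vectors, and the function names are illustrative.

```python
import numpy as np


def softmax(x):
    """Numerically stable softmax over a 1-D array."""
    e = np.exp(x - np.max(x))
    return e / e.sum()


def attention_fuse(query, keys, values):
    """Scaled dot-product attention with one key/value row per sensor.

    query: (d,), keys: (n_sensors, d), values: (n_sensors, d_v).
    Returns the fused feature vector and the per-sensor weights.
    """
    scores = keys @ query / np.sqrt(query.shape[0])
    weights = softmax(scores)
    return weights @ values, weights
```

The weights are computed from the data itself. A trained network can therefore learn to down-weight a sensor whose features look unreliable in a given scene.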

These AI approaches are showing greater robustness to sensor failures and environmental challenges than traditional hand-designed algorithms.

Simplified System Architectures

While early autonomous development often maxed out sensor capability with expensive, high-resolution sensors, the industry is now exploring minimum viable sensor setups that can still provide safe autonomous driving.

This trend shows up in:

- Strategic sensor placement to get maximum coverage with fewer units.

- AI systems that extract more information from existing sensors.

- Greater reliance on high-definition maps to supplement onboard sensing.

- Sensor configurations optimized for specific operational domains.
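Coverage trade-offs in sensor placement can be explored with a quick calculation. The sketch below estimates what fraction of the horizontal 360° field around the vehicle is seen by at least one sensor. It takes each sensor's mounting yaw and horizontal field of view as input. It ignores range, elevation, and occlusion, and the function name and example layouts are hypothetical.

```python
def horizontal_coverage(sensors, resolution_deg=1.0):
    """Fraction of the 360-degree horizon seen by at least one sensor.

    sensors: list of (mount_yaw_deg, horizontal_fov_deg) pairs.
    """
    steps = int(round(360.0 / resolution_deg))
    covered = 0
    for i in range(steps):
        bearing = i * resolution_deg
        for yaw, fov in sensors:
            # Smallest signed angle between this bearing and the sensor's boresight.
            diff = (bearing - yaw + 180.0) % 360.0 - 180.0
            if abs(diff) <= fov / 2.0:
                covered += 1
                break
    return covered / steps
```

For example, two 120° sensors facing forward and backward cover only about two-thirds of the horizon. A calculation like this makes the side gaps obvious before any hardware is mounted.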

These developments align with commercial pressure to cut system costs while maintaining performance—a necessary step for mass-market adoption.

Conclusion

Sensor fusion is the backbone of effective autonomous vehicle perception, helping self-driving systems overcome the limitations of individual sensors to create comprehensive environmental awareness. By combining LiDAR’s spatial precision, radar’s weather resistance, and cameras’ rich visual data, these systems achieve the robust perception capabilities needed for safe autonomous driving. These systems can accurately detect and identify objects in a variety of environmental conditions.

The evolution of sensor fusion continues to speed up, driven by advances in hardware, processing techniques, and artificial intelligence. From probabilistic occupancy grids to deep learning approaches, engineers are developing increasingly sophisticated methods to combine different sensor data into unified environmental representations.

Despite significant progress, challenges remain in synchronization, calibration, data processing, and production implementation. Addressing these challenges requires continued innovation across hardware and software domains, with promising developments already emerging in solid-state sensors, AI-driven fusion, and more efficient system architectures.